AI Technical SEO Audits: The New Frontier in Site Optimization

Introduction: Why Technical SEO Needs an AI Upgrade

Technical SEO audits have hit a complexity wall. Enterprise sites now contain millions of pages, dynamic content, and intricate architectures that manual auditing simply cannot handle efficiently. Traditional crawlers miss orphaned pages, analysts drown in data, and by the time issues are found, rankings have already suffered.

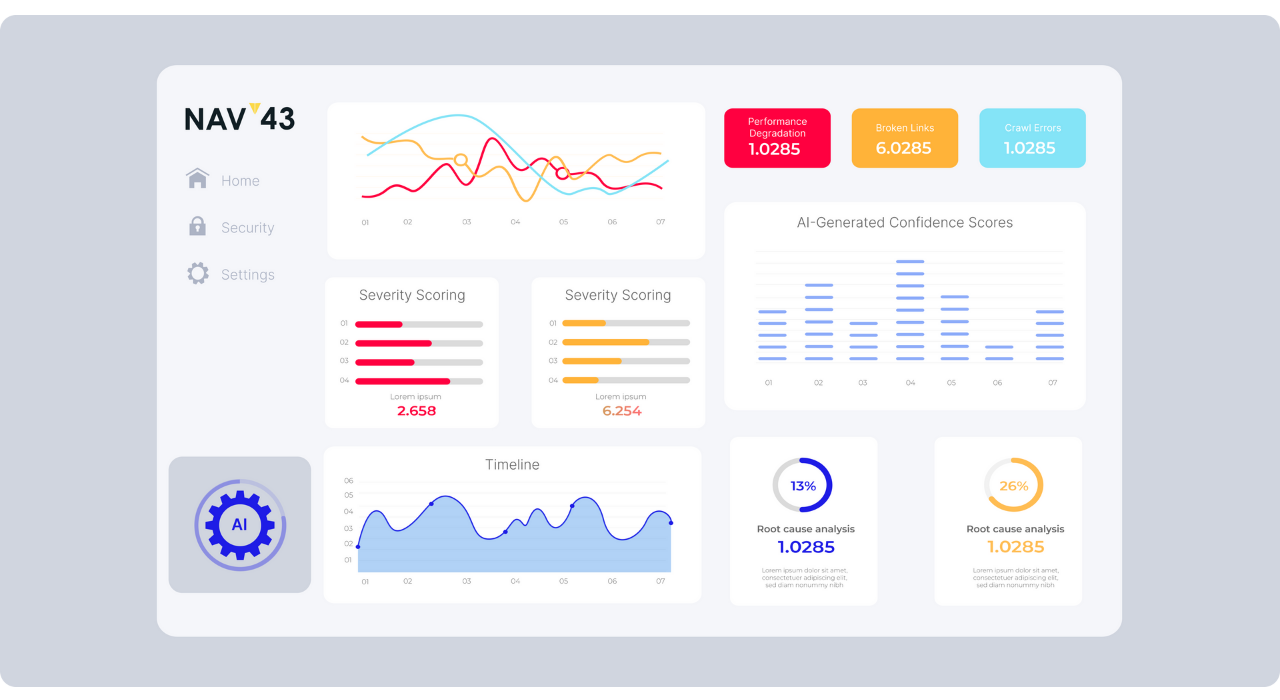

The promise of AI-powered technical SEO audits isn’t just speed – it’s intelligence. Machine learning algorithms can process entire site architectures in hours, detect anomalies human eyes miss, and predict performance degradation before it impacts users. AI-powered audits can also analyze and optimize a website’s infrastructure to ensure effective crawling, indexing, and performance. Modern AI SEO tools don’t just find problems; they understand patterns, prioritize fixes based on impact, and continuously monitor for new issues.

This guide reveals exactly how AI is revolutionizing technical audits, from ML-optimized crawling to predictive performance monitoring. You’ll learn practical implementation strategies, see real results, and discover how to future-proof your technical SEO for Google’s AI-first search landscape.

What Is Technical SEO? Core Pillars & Their Impact on Rankings

Technical SEO forms the foundation that makes your content discoverable and rankable. It’s the infrastructure ensuring search engines can efficiently crawl, understand, and index your site while delivering fast, secure experiences to users. Monitoring technical aspects such as crawlability, indexability, and site speed is crucial for maintaining optimal site health.

The core pillars of technical SEO include:

- Crawlability: Ensuring search engines can access all important pages through logical site architecture, proper internal linking, and optimized robots.txt configurations

- Indexability: Managing which pages appear in search results through canonical tags, noindex directives, and XML sitemaps

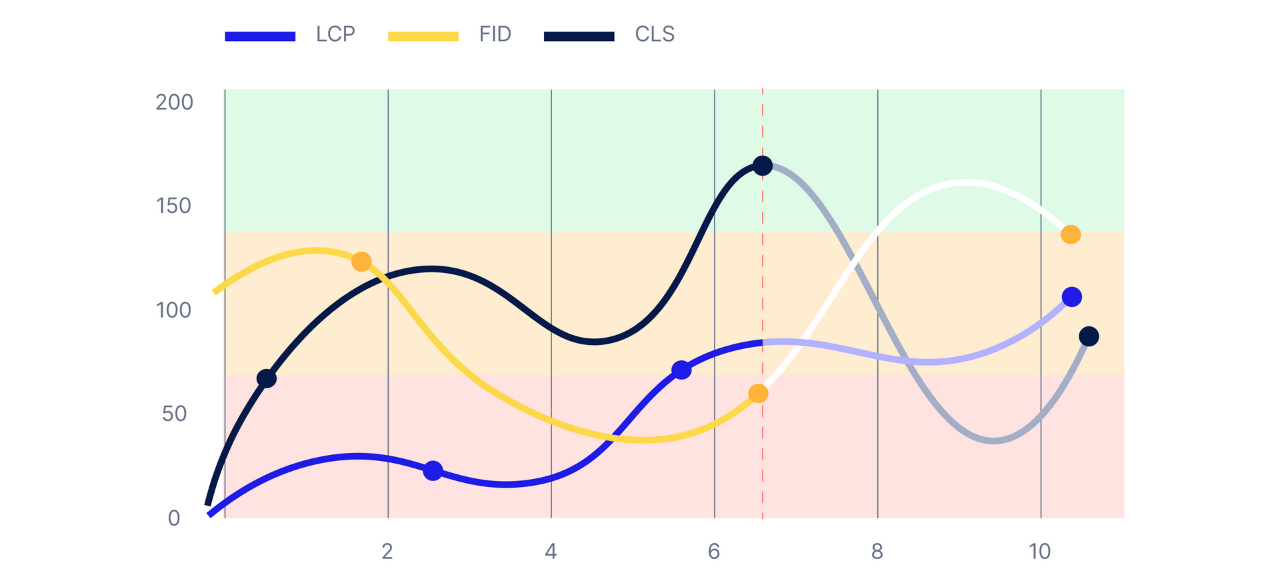

- Site Performance: Optimizing page speed, Core Web Vitals (LCP, INP, CLS), and mobile responsiveness

- Security & Trust: Implementing HTTPS, fixing mixed content issues, and maintaining consistent uptime

- Structured Data: Adding schema markup to help search engines understand content context and enable rich results

Comprehensive site audits help identify and address key aspects of technical SEO across all web pages, ensuring that your site remains healthy and optimized.
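As a small illustration of the crawlability pillar, the sketch below checks whether a handful of important URLs are blocked by robots.txt rules. It uses Python's standard `urllib.robotparser`; the robots.txt content, the URLs, and the `blocked_urls` helper are hypothetical, and the rules are kept to simple path prefixes.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content and URLs, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
"""

IMPORTANT_URLS = [
    "https://example.com/products/widget",
    "https://example.com/search?q=widget",
    "https://example.com/cart/checkout",
]

def blocked_urls(robots_txt: str, urls: list[str], agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given user agent may not fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse rules from text, no network call
    return [u for u in urls if not parser.can_fetch(agent, u)]

print(blocked_urls(ROBOTS_TXT, IMPORTANT_URLS))
```

Running a check like this against your list of priority pages is a quick way to catch an accidental Disallow rule before it affects indexing.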

These elements directly impact rankings and user experience. Google has confirmed that Core Web Vitals are ranking factors, while proper site architecture determines how effectively PageRank flows through your site. Poor technical health creates a ceiling on your SEO potential – no amount of great content can overcome a site that search engines struggle to crawl or users abandon due to slow load times.

The challenge lies in monitoring these factors at scale. A typical enterprise site might have thousands of potential technical issues across millions of URLs. This complexity makes AI-powered auditing not just helpful, but essential for maintaining competitive technical health.

Why Traditional Audits Fall Short in 2025

Manual SEO audits are hitting their limits. What worked for 10,000-page sites breaks down when dealing with modern enterprise platforms containing millions of URLs, dynamic content, and complex JavaScript frameworks. The primary audit limitations include time constraints – a comprehensive manual audit of a large site can take weeks, during which new issues emerge faster than old ones get fixed. Resource allocation becomes problematic when SEO teams spend 80% of their time identifying problems rather than solving them.

Data overload represents another critical challenge. Traditional crawlers generate massive spreadsheets of issues without context or prioritization. Teams waste hours sorting through false positives and minor issues while critical problems hide in the noise. Without sufficient technical knowledge, even experienced SEO professionals may struggle to interpret and act on the overwhelming data produced by traditional audits. Scalability issues mean that manual audits often sample just a fraction of pages, missing site-wide patterns or isolated but significant problems.

Most importantly, enterprise SEO challenges have evolved beyond what manual processes can handle. Modern sites use complex rendering, personalization, and edge computing that traditional audit approaches weren’t designed for. A technical SEO audit analyzes a website’s backend infrastructure and technical issues, which is increasingly difficult with manual methods. Meanwhile, Google’s AI-powered algorithms demand a level of technical precision that sporadic manual audits cannot maintain.

The result? Companies operate with outdated technical insights, react to problems rather than prevent them, and lose competitive advantage to more agile, AI-enabled competitors who can identify and fix issues in real-time.

AI & Machine Learning 101 for SEO Professionals

Machine learning basics for SEO start with understanding pattern recognition. ML models analyze vast amounts of crawl data, user behavior, and ranking patterns to identify relationships humans would never spot. Unlike rule-based systems, these algorithms improve over time, learning what constitutes a critical issue for your specific site.

Supervised learning trains models on labeled data – for example, teaching an algorithm which technical factors correlate with ranking drops by feeding it historical data. The model then applies these learnings to predict future issues. Unsupervised learning, conversely, finds hidden patterns without predefined labels, perfect for anomaly detection where you don’t know what problems to expect.

Modern AI algorithms for SEO go beyond simple if-then rules. They understand context – recognizing that a 404 error on your homepage requires immediate attention while the same error on an old blog post might be low priority. These systems consider hundreds of factors simultaneously: traffic patterns, link equity, conversion data, and competitive landscape.

Real-world SEO applications include predictive crawling that anticipates which pages need frequent monitoring, natural language processing that understands content quality and gaps, and performance prediction models that forecast Core Web Vitals degradation before users experience it.

The key insight? ML doesn’t replace SEO expertise – it amplifies it. By handling data processing and pattern recognition at superhuman scale, AI frees professionals to focus on strategy, creativity, and high-level optimization decisions that still require human judgment. Machine learning also provides valuable insights that guide ongoing SEO efforts and inform strategic decision-making.

Pre-Audit Foundations: Setting Up Google Search Console for AI Insights

Before diving into AI-powered technical SEO audits, it’s essential to lay the groundwork with Google Search Console (GSC). GSC acts as your direct line to how search engines view your site, providing critical data that fuels both manual and AI-driven SEO strategies. By verifying your website in GSC, you unlock access to a suite of tools designed to monitor search engine rankings, track search performance, and surface technical SEO issues before they impact your visibility.

One of the most valuable features is the URL inspection tool, which allows you to analyze individual pages for indexing status, crawl errors, and technical issues that could hinder search engine visibility. GSC’s Search Analytics report offers deep insights into user behavior, showing which queries drive impressions and clicks, and highlighting opportunities to refine your SEO strategies.

GSC also proactively alerts you to technical issues such as broken links, missing meta tags, or sudden drops in search performance. These alerts are invaluable for maintaining a healthy site and ensuring that technical SEO issues are addressed promptly. When integrated with AI tools, GSC data becomes even more powerful—feeding real-time information into your audit engine and enabling smarter, faster decision-making. Setting up GSC correctly is the first step toward a data-driven, AI-enhanced technical SEO audit that keeps your site competitive in search engine results.

ML-Optimized Crawling: Faster, Deeper, Smarter

Traditional crawlers operate like tourists with outdated maps, methodically visiting every URL regardless of importance. AI site crawl technology fundamentally reimagines this process. Machine learning algorithms analyze your site’s structure, traffic patterns, and update frequency to create intelligent crawl strategies that find issues faster while using fewer resources. Unlike traditional search engine bots, AI-powered crawlers interact with websites in more sophisticated ways, enabling deeper and more efficient analysis of technical SEO factors.

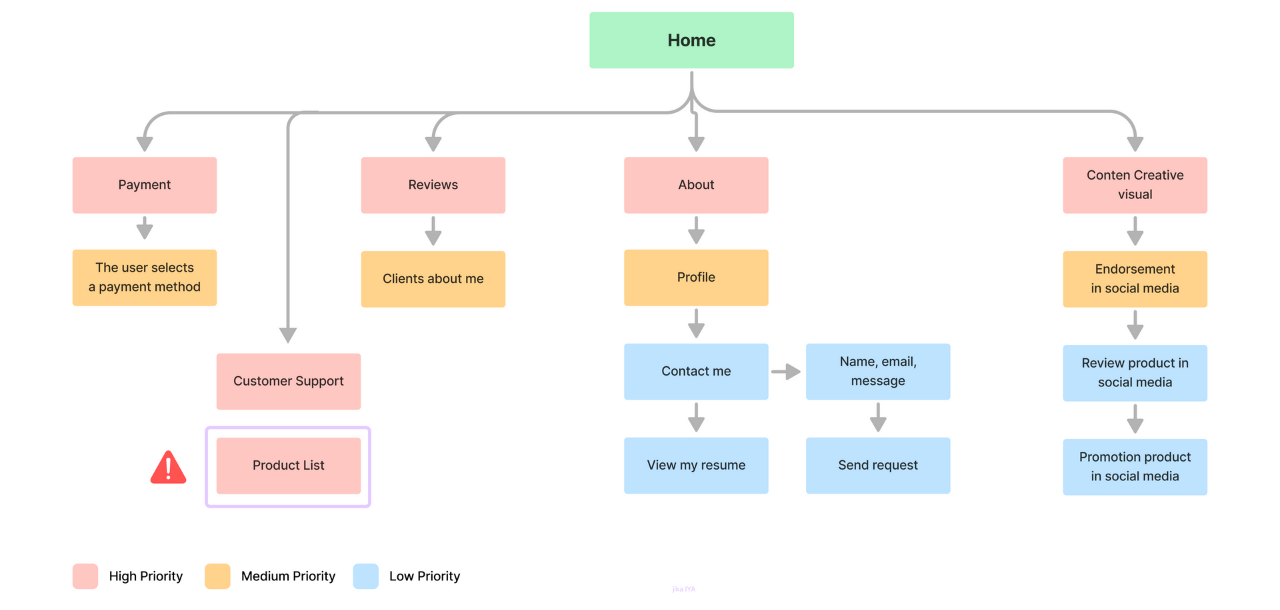

Dynamic crawl scheduling represents a quantum leap in efficiency. Instead of crawling every page equally, ML models identify priority pages based on multiple signals: organic traffic, conversion value, update frequency, and link equity. A product page generating significant revenue gets crawled hourly, while archived blog posts might be checked monthly. This intelligent resource allocation means critical issues surface immediately rather than hiding in crawl queues.
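To make the idea concrete, here is a minimal sketch of priority-based crawl scheduling. It is not a trained model: the weights, thresholds, field names, and example pages are all assumptions chosen for illustration, standing in for what an ML system would learn from your data.

```python
import math
from dataclasses import dataclass

@dataclass
class PageSignals:
    url: str
    monthly_visits: int
    monthly_revenue: float
    updates_per_month: int
    internal_links: int

# Hand-picked weights (an assumption); a trained model would learn these.
WEIGHTS = {"visits": 0.3, "revenue": 0.4, "updates": 0.2, "links": 0.1}

def priority(p: PageSignals) -> float:
    # log1p dampens very large values so one signal cannot dominate the score.
    return (
        WEIGHTS["visits"] * math.log1p(p.monthly_visits)
        + WEIGHTS["revenue"] * math.log1p(p.monthly_revenue)
        + WEIGHTS["updates"] * math.log1p(p.updates_per_month)
        + WEIGHTS["links"] * math.log1p(p.internal_links)
    )

def crawl_interval_hours(score: float) -> int:
    # Hypothetical tiers: hourly, daily, or roughly monthly.
    if score >= 5.0:
        return 1
    if score >= 2.5:
        return 24
    return 24 * 30

product = PageSignals("/products/best-seller", 50_000, 120_000.0, 30, 400)
archive = PageSignals("/blog/2016/old-post", 20, 0.0, 0, 2)

for page in (product, archive):
    print(page.url, crawl_interval_hours(priority(page)))
```

With these example numbers the revenue-generating product page lands in the hourly tier and the archived post in the monthly tier, mirroring the allocation described above.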

One of AI crawling’s most valuable capabilities is discovering orphan pages – URLs with no internal links that traditional crawlers miss entirely. ML algorithms analyze server logs, sitemaps, and external data sources to identify these isolated pages. Identifying the specific pages affected by technical issues allows for targeted fixes and improved site health. At NAV43, we recently discovered a client had 15,000 orphaned product pages from a failed migration. These pages still received organic traffic but were invisible to standard audits.
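At its core, orphan detection is a set comparison: URLs known from sitemaps or server logs, minus URLs reachable through internal links. The sketch below shows that comparison with hypothetical data; the function name and inputs are illustrative, and a production system would first normalize URLs and pull these lists from real crawl and log exports.

```python
def find_orphans(sitemap_urls, log_urls, linked_urls):
    """Return URLs known from sitemaps or server logs that no internal link points to."""
    known = set(sitemap_urls) | set(log_urls)
    return sorted(known - set(linked_urls))

# Hypothetical inputs for illustration.
sitemap = ["/products/a", "/products/b", "/about"]
log_hits = ["/products/b", "/products/legacy-123"]  # URLs requested in server logs
linked = ["/products/a", "/about"]                  # URLs reached by following internal links

orphans = find_orphans(sitemap, log_hits, linked)
print(orphans)
```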

Crawl budget optimization becomes particularly powerful at enterprise scale. The AI learns your site’s crawl patterns and Google’s behavior, then adjusts crawl rates dynamically. During high-traffic periods, it might slow crawling to prevent server strain. Optimizing crawl schedules can also improve site speed by reducing server load during peak times. When launching new content, it accelerates crawling of those specific sections. One e-commerce client saw their important pages getting crawled 3x more frequently after implementing ML-optimized crawling, leading to faster indexation of new products.

The AI doesn’t just crawl smarter – it crawls deeper. By understanding site architecture patterns, ML models can predict where issues cluster. If mobile pages in one template show problems, the system prioritizes similar templates for inspection. This pattern recognition catches systemic issues that random sampling would miss.

Real-world results speak volumes. Our ML crawler discovered that a major retailer’s faceted navigation created 2.3 million near-duplicate URLs. Traditional crawling would have taken weeks to map this issue. The AI identified the pattern in hours, traced the root cause, and provided a precise fix that recovered 12% of their crawl budget.
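One simple way to surface this kind of pattern is to strip facet parameters from each URL and group the results. The sketch below does that with Python's standard library; the facet parameter names and example URLs are assumptions, and it is a simplified stand-in rather than the ML approach described above.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical facet parameters; adjust to match your own navigation.
FACET_PARAMS = {"color", "size", "sort"}

def canonical_form(url: str) -> str:
    """Drop facet parameters and sort the rest so equivalent URLs compare equal."""
    parts = urlsplit(url)
    kept = sorted((k, v) for k, v in parse_qsl(parts.query) if k not in FACET_PARAMS)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))

def duplicate_clusters(urls):
    """Group URLs by canonical form and keep only groups with more than one member."""
    groups = defaultdict(list)
    for url in urls:
        groups[canonical_form(url)].append(url)
    return {key: members for key, members in groups.items() if len(members) > 1}

crawled = [
    "https://example.com/shoes?color=red&sort=price",
    "https://example.com/shoes?sort=price&color=blue",
    "https://example.com/shoes",
    "https://example.com/hats?id=7",
]
print(duplicate_clusters(crawled))
```

Large clusters point to templates where canonical tags or parameter handling deserve a closer look.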

Automated Issue Detection & Anomaly Recognition

The true power of anomaly detection in SEO lies in catching problems before they escalate. Unlike traditional monitoring that relies on static thresholds, ML-powered systems understand your site’s normal behavior patterns and flag deviations that matter.

Modern AI excels at broken link detection, but it goes far beyond simple 404 hunting. The system learns which broken links actually impact user experience and rankings. A broken link in your main navigation gets flagged immediately with high priority. The same error in a 5-year-old blog comment might be logged but deprioritized. This contextual understanding prevents alert fatigue while ensuring critical issues get immediate attention.

Real-time SEO monitoring powered by ML operates like an intelligent security system for your site. Rather than running weekly crawls and discovering problems days later, these systems continuously analyze log files, user behavior data, and Search Console metrics. When anomalies occur – sudden traffic drops, crawl rate changes, or error spikes – you know within hours, not weeks. AI-powered monitoring can also quickly identify duplicate pages, which often confuse search engines and lead to indexing problems.

Consider BrightEdge Anomaly Detection, which automatically flags “out-of-the-ordinary events” in your SEO performance. The platform doesn’t just notice that organic traffic dropped 20% – it correlates this with other signals to identify root causes. Did a competitor launch new content? Did your page speed degrade? Did Google change its algorithm? This multi-factor analysis transforms raw alerts into actionable insights.

The financial impact of automated detection is substantial. Manual monitoring might catch a redirect loop affecting 1,000 product pages after a week of lost traffic. AI-powered monitoring flags the issue within hours. For a site generating $10,000 daily from those pages, catching the problem on day one instead of day seven prevents roughly $60,000 in losses from just one issue.

Anomaly scoring adds another layer of intelligence. Not all anomalies deserve panic – traffic naturally fluctuates. ML models learn your site’s seasonal patterns, day-of-week variations, and normal volatility. They assign confidence scores to each anomaly, helping teams focus on genuine problems rather than statistical noise. A 10% traffic drop on a typically slow Tuesday might score 2/10, while the same drop on Black Friday would score 9/10.
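A simplified version of this scoring can be sketched with a z-score against a same-weekday baseline. The scaling factor, the 0 to 10 mapping, and the traffic figures below are assumptions for illustration; real systems would also model seasonality and trends.

```python
from statistics import mean, stdev

def anomaly_score(history, observed):
    """Score 0-10: how unusual `observed` is compared with same-weekday history."""
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return 0.0 if observed == mu else 10.0
    z = abs(observed - mu) / sigma
    # Assumed scaling: a deviation of 4 or more standard deviations maps to 10.
    return min(10.0, round(z * 2.5, 1))

# Hypothetical daily sessions for two kinds of days.
volatile_tuesdays = [900, 1100, 1000, 1200, 800]
stable_peak_days = [10_000, 10_100, 9_900, 10_050, 9_950]

# The same 10% drop scores very differently depending on normal volatility.
print(anomaly_score(volatile_tuesdays, 900))
print(anomaly_score(stable_peak_days, 9_000))
```

The design choice here is to judge a drop relative to how much that day normally fluctuates, which is what keeps routine noise from triggering alerts.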

Classic Audit Essentials: Identifying Broken Links & Assessing Internal Linking

A thorough technical SEO audit always starts with the basics: identifying broken links and evaluating your internal linking structure. Broken links—whether internal or external—can confuse search engines, disrupt user journeys, and dilute your site’s authority. Search engine crawlers encountering broken internal links may struggle to fully index your site, leading to missed ranking opportunities and a diminished user experience.

Using robust audit tools like Screaming Frog SEO Spider, you can quickly scan your website for broken internal links and external links, flagging pages that need immediate attention. But detection is only half the battle. Assessing your internal linking is equally crucial: all the internal links should be strategically placed to ensure that every important page is accessible and that link equity flows efficiently throughout your site.

Optimizing internal linking not only helps search engine crawlers discover and prioritize your most valuable content, but it also supports higher search engine rankings by reinforcing site structure and topical relevance. By addressing broken links and refining your internal linking during a technical SEO audit, you lay a strong foundation for both user experience and search engine performance.
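For a sense of what a crawler does under the hood, the sketch below extracts links from a page and flags internal ones that return an error status. It is a minimal illustration, not how Screaming Frog works internally: the HTML, URLs, and status table are hypothetical, and `status_of` is a placeholder you would replace with real HTTP requests.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit

class LinkExtractor(HTMLParser):
    """Collect absolute URLs from every <a href> on a page."""
    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(urljoin(self.base_url, href))

def broken_internal_links(page_url, html, status_of):
    """Return internal links whose status code (from `status_of`) is 400 or higher."""
    parser = LinkExtractor(page_url)
    parser.feed(html)
    host = urlsplit(page_url).netloc
    return [u for u in parser.links if urlsplit(u).netloc == host and status_of(u) >= 400]

# Hypothetical page and status table standing in for live HTTP checks.
HTML = '<a href="/pricing">Pricing</a><a href="/old-page">Old</a><a href="https://other.example/x">Ext</a>'
STATUS = {"https://example.com/pricing": 200, "https://example.com/old-page": 404}

print(broken_internal_links("https://example.com/", HTML, lambda u: STATUS.get(u, 200)))
```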

AI for Core Web Vitals & Performance Engineering

Performance monitoring has evolved from periodic snapshots to continuous intelligence. AI-driven monitoring of a website’s performance ensures that technical issues are addressed before they impact user experience and search rankings. AI-driven Core Web Vitals optimization leverages real user monitoring (RUM) data to understand performance patterns across devices, locations, and user conditions in ways that lab testing never could.

The integration of real user monitoring with AI creates a continuous feedback loop. Every user interaction provides data about actual performance experienced in the wild. ML models process millions of these data points to identify patterns: certain mobile devices struggling with LCP on product pages, specific geographic regions experiencing high CLS, or particular user paths triggering INP issues.

Predictive degradation alerts represent a breakthrough in performance monitoring. Traditional monitoring tells you when performance has already failed. AI predicts when it will fail. By analyzing trends in your LCP metrics, ML models can forecast when a gradually increasing image size or growing JavaScript bundle will push load times past acceptable thresholds.
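The simplest form of this forecast is a straight-line trend. The sketch below fits a least-squares line to daily LCP readings and estimates how many days remain before the trend crosses 2.5 seconds, the upper bound of Google's "good" LCP range. The function name and sample readings are hypothetical, and a linear fit is an assumption; production models would account for seasonality and deployment events.

```python
def days_until_threshold(values, threshold=2.5):
    """Fit a least-squares line to daily readings and estimate days until it crosses `threshold`.

    Returns None when the trend is flat or improving.
    """
    n = len(values)
    xs = range(n)
    x_mean = sum(xs) / n
    y_mean = sum(values) / n
    slope = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, values)) / sum(
        (x - x_mean) ** 2 for x in xs
    )
    if slope <= 0:
        return None
    intercept = y_mean - slope * x_mean
    crossing_day = (threshold - intercept) / slope
    # Days remaining after the most recent reading; 0 if already past the threshold.
    return max(0.0, crossing_day - (n - 1))

# Hypothetical 75th-percentile LCP readings in seconds, one per day.
lcp_readings = [2.00, 2.05, 2.10, 2.15, 2.20]
print(days_until_threshold(lcp_readings))
```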

CLS monitoring through AI reveals patterns invisible to standard tools. The system learns which layout shifts users actually notice versus technical measurements that don’t impact experience. It can differentiate between acceptable shifts (like cookie banners) and problematic ones (product images jumping during load). This nuanced understanding prevents teams from over-optimizing metrics that don’t matter while missing real UX issues.

Developer handoff becomes seamless with AI-powered insights. Instead of vague reports about “slow pages,” AI provides specific, actionable intelligence: “Product template JavaScript execution increased 200ms after the March 15 deployment, specifically the reviewWidget.js module initialization.” Developers receive exactly what they need to fix issues quickly.

NLP-Driven Content Gap Analysis: Bridging Tech & Content

Technical SEO no longer exists in isolation from content strategy. NLP technology bridges these disciplines by analyzing semantic patterns, user intent, and topical coverage at scale. This integration reveals opportunities that neither technical audits nor content audits would find alone.

Content gap analysis powered by natural language processing goes beyond simple keyword matching. ML models understand context, synonyms, and semantic relationships. They analyze your content against top-ranking competitors to identify not just missing keywords, but missing concepts, questions, and user intents.

Consider how semantic SEO analysis uncovered a massive opportunity for a B2B software client. Traditional keyword research showed they ranked well for their main terms. But NLP analysis revealed they completely missed an entire category of “how-to” content that competitors dominated. Their technical documentation was excellent, but they lacked practical implementation guides. This gap represented 40% of search volume in their space.

Topic modeling algorithms cluster related concepts to reveal content architecture opportunities. Rather than creating isolated pages for every keyword variant, NLP identifies natural content hubs and spoke structures. The AI might discover that queries about “email authentication,” “DKIM setup,” and “SPF records” cluster together, suggesting a comprehensive guide rather than scattered blog posts.

AI-driven competitor analysis takes this further by understanding not just what competitors write about, but how they structure information. The system identifies successful content patterns: do top-ranking pages include comparison tables? Step-by-step tutorials? Video embeds? Optimizing meta tag usage can further enhance the visibility and relevance of content in search results by providing search engines with clear, structured information about each page. This structural analysis informs both content creation and technical implementation.

The workflow from insight to action is straightforward. NLP analysis outputs specific content gaps with search volume data, competitive difficulty scores, and topical relevance ratings. These feed directly into editorial calendars with clear priorities. A gap representing 10,000 monthly searches with low competition gets flagged for immediate action. Missing content for 100 searches might be bundled into a broader piece.

AI-Enhanced Keyword Research: Uncovering New Opportunities

Keyword research is evolving, and AI tools are at the forefront of this transformation. Traditional methods often miss emerging trends and nuanced user intent, but AI-enhanced keyword research can analyze massive datasets to uncover relevant keywords and search patterns that drive real results. By leveraging AI, you can go beyond surface-level keyword lists to understand the context, intent, and behavior behind every search.

AI tools excel at identifying technical SEO issues that impact keyword performance, such as missing or inconsistent meta descriptions and duplicate content across multiple pages. These insights allow you to optimize page content and meta tags for both users and search engines, ensuring your site targets the most valuable and relevant keywords.

Moreover, AI-driven keyword research provides actionable recommendations for improving your technical SEO, from refining keyword usage to addressing on-page issues that may be holding back your rankings. By integrating AI into your keyword research process, you gain a competitive edge—discovering new opportunities, enhancing your content strategy, and driving more targeted traffic to your website.

Using AI Tools for Backlink Analysis

Backlink analysis is a cornerstone of technical SEO, and AI tools are revolutionizing how SEO professionals approach this task. By harnessing the power of AI, you can analyze your backlink profile with unprecedented depth, uncovering patterns in anchor text distribution, link equity, and referring domains that might otherwise go unnoticed.

AI-powered backlink analysis helps you identify toxic backlinks that could harm your search engine rankings, providing clear recommendations for removal or disavowal. These tools also surface new backlink opportunities, such as high-authority resource pages or guest blogging prospects, and offer guidance on how to acquire links that will boost your website’s authority and search engine performance.

With AI tools, backlink analysis becomes more than just a numbers game—it’s a strategic process that informs your overall technical SEO and search engine optimization efforts. By continuously monitoring and optimizing your backlink profile, you can strengthen your site’s authority, improve search engine rankings, and drive more qualified referral traffic.

Preparing for Google SGE & Other AI-First Search Engines

The arrival of search generative experience (SGE) and similar AI-powered search features demands a fundamental rethink of technical SEO. These systems don’t just crawl and index – they understand, synthesize, and generate responses. Sites optimized for traditional search may be invisible to AI-first engines. Optimizing for AI-first search engines can significantly improve search visibility and search rankings, ensuring your content is effectively discovered and prioritized in evolving search results.

AI search optimization starts with understanding how these systems consume information. Unlike traditional crawlers that follow links and parse HTML, AI search models ingest content holistically. They prioritize clear, well-structured information that can be understood and recombined into generated responses.

Structured data accessibility becomes critical in this new paradigm. Many sites add schema markup through JavaScript, assuming search engines will execute it. But most AI crawlers don’t run JavaScript – if your structured data only exists after JS execution, you’re invisible to AI search. The solution? Embed critical schema in server-rendered HTML or implement prerendering to ensure all structured data is immediately accessible.
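You can test this yourself by inspecting the raw HTML response, before any JavaScript runs. The sketch below extracts JSON-LD blocks from an HTML string using Python's standard library; the class name, helper function, and sample pages are hypothetical, and in practice you would feed it the unrendered response body for each template.

```python
import json
from html.parser import HTMLParser

class JsonLdExtractor(HTMLParser):
    """Collect JSON-LD blocks that are present in the raw, unrendered HTML."""
    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buffer = []
        self.items = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self._in_jsonld = False
            try:
                self.items.append(json.loads("".join(self._buffer)))
            except json.JSONDecodeError:
                pass  # malformed JSON-LD is skipped in this sketch

def schema_types_in_raw_html(html):
    parser = JsonLdExtractor()
    parser.feed(html)
    return [item.get("@type") for item in parser.items if isinstance(item, dict)]

# Hypothetical pages: one server-renders its schema, one injects it with JavaScript.
SERVER_RENDERED = (
    '<html><head><script type="application/ld+json">'
    '{"@context": "https://schema.org", "@type": "Product", "name": "Widget"}'
    "</script></head><body></body></html>"
)
JS_INJECTED = '<html><head><script src="/inject-schema.js"></script></head><body></body></html>'

print(schema_types_in_raw_html(SERVER_RENDERED))
print(schema_types_in_raw_html(JS_INJECTED))
```

An empty result for a page that shows schema in browser dev tools is a strong hint that the markup only exists after JavaScript execution.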

Code hygiene takes on new importance for AI crawlers. Clean, semantic HTML helps AI understand content hierarchy and relationships. Proper heading structure (H1→H2→H3) isn’t just good practice – it’s how AI models understand information architecture. Tables should use proper semantic markup. Lists should be actual HTML lists, not styled divs.
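Heading structure is easy to check automatically. The sketch below collects heading levels in document order and reports any jump that skips a level, such as an H2 followed directly by an H4. The class, function, and sample markup are illustrative assumptions.

```python
from html.parser import HTMLParser

class HeadingCollector(HTMLParser):
    """Record heading levels (1-6) in the order they appear."""
    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))

def skipped_levels(html):
    """Return (from_level, to_level) pairs where the outline jumps more than one level down."""
    collector = HeadingCollector()
    collector.feed(html)
    levels = collector.levels
    return [(a, b) for a, b in zip(levels, levels[1:]) if b > a + 1]

# Hypothetical page outline with one skipped level.
SAMPLE = "<h1>Guide</h1><h2>Setup</h2><h4>Details</h4><h2>Usage</h2>"
print(skipped_levels(SAMPLE))
```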

Prerendering strategies ensure AI crawlers see complete pages. Dynamic content, lazy-loaded images, and client-side rendered elements might never be processed by AI systems. Implementing server-side rendering or static generation for critical content ensures AI search engines can fully understand and index your information.

Here’s a practical checklist for AI search readiness:

- Audit all structured data implementation – ensure it’s in the initial HTML

- Implement proper semantic HTML throughout the site

- Create comprehensive FAQ and Q&A content that directly answers user queries

- Ensure all critical content is accessible without JavaScript execution

- Add detailed schema markup for entities, concepts, and relationships

- Structure content in clear, logical hierarchies that AI can parse

- Optimize for featured snippets – these often feed AI-generated responses

The sites winning in AI-first search aren’t just technically sound – they’re technically transparent. Every piece of information is clearly labeled, properly structured, and immediately accessible. This isn’t just future-proofing; Google has confirmed these factors already influence current rankings as they transition to AI-powered results. Similar optimization strategies can also enhance performance on other search engines adopting AI-driven features.

The Human + AI Workflow: Best Practices & Governance

Success with human-in-the-loop SEO requires clear role definition. AI excels at data processing, pattern recognition, and continuous monitoring. Humans provide strategy, context, and creative problem-solving. The most effective workflows leverage both strengths without overlap or conflict.

AI governance in SEO starts with establishing clear decision boundaries. AI can identify that 500 pages have slow load times and rank them by traffic impact. But humans decide whether to fix all 500, focus on the top 50, or redesign the underlying template. This division prevents both analysis paralysis and blind automation.

Audit prioritization frameworks like RICE (Reach, Impact, Confidence, Effort) or ICE (Impact, Confidence, Ease) work perfectly with AI-generated insights. The AI provides objective metrics for each factor: reach based on traffic data, impact from historical correlation analysis, confidence from pattern matching across similar issues. Human expertise then weighs these factors against business goals and resource constraints.
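As a quick illustration, RICE is commonly computed as Reach × Impact × Confidence ÷ Effort. The sketch below ranks a few audit findings with that formula; the issues and all of their numbers are hypothetical, and in practice the AI would supply the inputs while your team reviews the final ordering.

```python
from dataclasses import dataclass

@dataclass
class Issue:
    name: str
    reach: int         # e.g. monthly sessions affected
    impact: float      # relative impact, commonly on a 0.25 to 3 scale
    confidence: float  # 0 to 1
    effort: float      # person-weeks

def rice(issue: Issue) -> float:
    """RICE score: reach x impact x confidence, divided by effort."""
    return issue.reach * issue.impact * issue.confidence / issue.effort

# Hypothetical audit findings for illustration.
issues = [
    Issue("Missing alt text on blog images", 5_000, 0.5, 0.8, 4),
    Issue("Redirect loop on product template", 40_000, 3.0, 0.9, 2),
    Issue("Slow LCP on category pages", 25_000, 2.0, 0.7, 5),
]

ranked = sorted(issues, key=rice, reverse=True)
for issue in ranked:
    print(f"{rice(issue):>10.0f}  {issue.name}")
```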

Managing AI false positives requires systematic quality assurance. Every AI system generates some incorrect alerts – the key is learning from them. When analysts mark an alert as false positive, that feedback trains the model. Over time, the system becomes more accurate for your specific context. One client reduced false positive rates from 15% to 3% over six months through consistent feedback loops.

Effective governance also means establishing escalation protocols. Critical issues identified by AI – like complete crawl blocks or site-wide performance failures – trigger immediate human review. Lower-priority findings might accumulate for weekly review sessions. This tiered approach ensures urgent problems get immediate attention while preventing alert fatigue.

Documentation becomes crucial in human+AI workflows. Every AI recommendation should include transparency about its reasoning: what patterns triggered this alert? What historical data supports this priority level? This documentation helps humans make informed decisions and builds trust in AI recommendations over time. Integrating AI-generated findings into SEO reporting ensures that all stakeholders have access to transparent, actionable data.

The most successful implementations treat AI as a team member, not a tool. Regular “performance reviews” assess the AI’s accuracy, identify areas for improvement, and celebrate successes. This mindset shift from “using AI” to “working with AI” drives better outcomes and team adoption.

Implementation Roadmap: Bringing AI Audits In-House or Via Agency

Choosing between building internal AI capabilities or partnering with an AI-enabled agency depends on your scale, resources, and technical complexity. Both paths can succeed with proper planning.

Selecting AI SEO tools starts with defining your needs. High-traffic sites need real-time monitoring capabilities. JavaScript-heavy sites require rendering analysis. International sites benefit from multi-language NLP capabilities. Create a requirements matrix mapping your technical challenges to tool capabilities before evaluating options. When comparing different SEO audit tools, consider how they integrate with other SEO tools and platforms like Google Analytics to ensure comprehensive site analysis and reporting.

The build vs buy decision often comes down to expertise and speed. Building in-house AI capabilities requires data scientists, ML engineers, and significant infrastructure investment. The advantage? Complete customization and data control. The challenge? 12-18 month development cycles and ongoing maintenance costs. Buying or partnering provides immediate capabilities but less customization.

The audit rollout plan should be phased for manageable adoption:

- Phase 1 (Weeks 1-4): Deploy AI crawling on critical site sections, establish baselines

- Phase 2 (Weeks 5-8): Activate anomaly detection and performance monitoring

- Phase 3 (Weeks 9-12): Implement NLP content analysis and gap identification

- Phase 4 (Ongoing): Continuous optimization and model training

Stakeholder alignment requires translating technical capabilities into business value. Don’t pitch “ML-powered anomaly detection” – explain how you’ll “prevent revenue loss by catching issues 10x faster.” Create dashboards that show both technical metrics and business impact to maintain executive support.

Integration considerations include connecting AI insights with existing workflows. The best AI audit data is worthless if it sits in another silo. Ensure your chosen solution integrates with project management tools, development workflows, and reporting systems your team already uses.

Prioritizing Technical SEO Fixes: From Audit to Action

After completing a thorough technical SEO audit, the next step is turning insights into action. Prioritizing technical SEO fixes is essential for maximizing your website’s search engine visibility and delivering a seamless user experience. AI tools play a pivotal role here, helping you analyze key data points—such as core web vitals metrics, keyword usage, and site structure—to determine which issues will have the greatest impact on search engine rankings.

Start by addressing critical issues like broken links, missing meta tags, and core web vitals failures, as these can significantly hinder your site’s performance in search results. Use AI-driven insights to identify which fixes will deliver the highest ROI, whether it’s optimizing internal linking, restructuring your site architecture, or refining meta tags for better relevance.

By leveraging AI tools to prioritize and implement technical SEO fixes, you ensure that your SEO strategies are data-driven and focused on what matters most. This approach not only improves your site’s health and search engine rankings but also supports long-term SEO success by continuously adapting to new challenges and opportunities.

Conclusion: Future-Proof Your Technical SEO Strategy Now

The future of SEO isn’t coming – it’s here. Sites still relying on quarterly manual audits are competing with one hand tied behind their back. AI technical audits deliver speed, scale, and intelligence that manual processes simply cannot match.

The benefits are clear: 10x faster issue detection, comprehensive coverage instead of sampling, predictive insights that prevent problems, and continuous optimization rather than periodic fixes. Early adopters are already seeing 15-25% improvements in organic performance through AI-powered technical optimization.

Your next-gen SEO strategy should embrace AI augmentation while maintaining human strategic oversight. Start with pilot projects on high-value site sections. Build expertise gradually. Measure results rigorously. The sites winning tomorrow’s AI-powered search landscape are investing in AI-powered optimization today.

Ready to explore how AI can transform your technical SEO? Contact NAV43 to discuss a custom AI audit strategy for your site, or explore our comprehensive technical SEO audit services to see AI-powered optimization in action.

-

Modern AI audit tools achieve 95-98% accuracy for technical issue detection, surpassing manual audits, which typically catch only 60-70% of issues due to sampling limitations. The key difference isn’t just accuracy – it’s scale. AI can audit millions of pages with consistent precision, while manual audits rely on sampling.

-

No. Modern AI SEO platforms are designed for SEO professionals, not data scientists. They include pre-trained models and intuitive interfaces. However, having technical SEO expertise remains crucial for interpreting results and planning fixes.

-

AI augments rather than replaces SEO professionals. While AI excels at data processing and pattern recognition, strategic thinking, creative problem-solving, and stakeholder communication remain distinctly human skills. AI handles the "what" – humans determine the "why" and "how."

-

AI enables continuous monitoring rather than periodic audits. Critical metrics should be monitored in real-time, comprehensive crawls can run weekly, and deep analysis might occur monthly. This shift from periodic to continuous auditing prevents issues from festering between manual reviews.