How to Perform a Technical SEO Audit in 7 Actionable Steps

Last month, I received a panicked call from a client whose organic traffic had dropped 40% overnight. After digging into their site, I discovered over 10,000 pages returning 404 errors and their Core Web Vitals failing across the board. Their development team had pushed a major update without considering the SEO implications.

This scenario plays out more often than you’d think. In fact, 61% of marketers say improving SEO and growing their organic presence is their top inbound marketing priority. Yet many sites are bleeding traffic from technical issues they don’t even know exist.

A technical SEO audit examines the infrastructure of your website – the crawlability, indexability, and performance factors that determine whether search engines can properly access and understand your content. Unlike on-page SEO (which focuses on content optimization) or off-page SEO (which deals with backlinks and authority), technical SEO ensures your site’s foundation is solid.

This guide walks you through a proven 7-step process to identify and fix site crawl issues that could be sabotaging your rankings. The audit process evaluates how search engine crawlers discover, crawl, and index your web pages, ensuring there are no obstacles that could hinder their effectiveness. It also covers how structured data and schema markup help search engines understand your website content, improving visibility and rich snippet opportunities. By the end, you’ll have a clear roadmap to perform your own SEO health check and prioritize fixes that actually move the needle.

Introduction: What is a Technical SEO Audit and Why Does It Matter?

A technical SEO audit is a deep-dive analysis of your website’s technical aspects to ensure it’s fully optimized for search engines. Unlike content or link-focused SEO audits, a technical SEO audit zeroes in on the behind-the-scenes elements that determine whether search engine bots can effectively crawl, index, and understand your site. This process uncovers technical issues – like crawl errors, slow site speed, broken links, or misconfigured meta tags – that can quietly undermine your search engine rankings and limit your visibility on search engine results pages.

Why does this matter? Search engines rely on a technically sound foundation to accurately interpret and rank your content. Even the best-written pages can be invisible in search engine results if technical issues block search engine bots or confuse their algorithms. Regular technical SEO audits are essential for maintaining strong search engine visibility, driving organic traffic, and ensuring your site performs at its best. By proactively identifying and fixing technical issues, you lay the groundwork for higher rankings, better user experience, and long-term SEO success.

Impact of Technical Debt on Rankings & Revenue

Technical SEO isn’t just about pleasing search engines – it directly impacts your bottom line. Consider these sobering statistics:

- A 1-2 second delay in page load time can reduce conversions by 2-4%

- 53% of mobile users abandon sites that take over 3 seconds to load

- Bounce rates double when page load time increases from 1 to 3 seconds

The site’s speed is a critical factor not only for user experience but also for SEO rankings, as search engines prioritize fast-loading websites.

I’ve seen firsthand how technical SEO errors compound over time. One e-commerce client was losing $50,000 per month in potential revenue because their product pages were stuck in redirect loops.

Technical debt accumulates silently, but its effects on rankings and revenue are anything but subtle. Crawl errors, slow load times, and broken links each chip away at how well your site performs for both users and search engines.

The SEO revenue impact extends beyond lost traffic. When users encounter broken pages, slow load times, or mobile usability issues, they lose trust in your brand. This damage to user experience creates a negative feedback loop – poor engagement signals tell Google your site isn’t worth ranking highly, which makes strong visibility in organic search harder to achieve and maintain.

Page speed conversion rates are particularly critical for e-commerce and lead generation sites. Every millisecond counts when users have countless alternatives just a click away.

Audit Prep: Tools, Access & Benchmarking

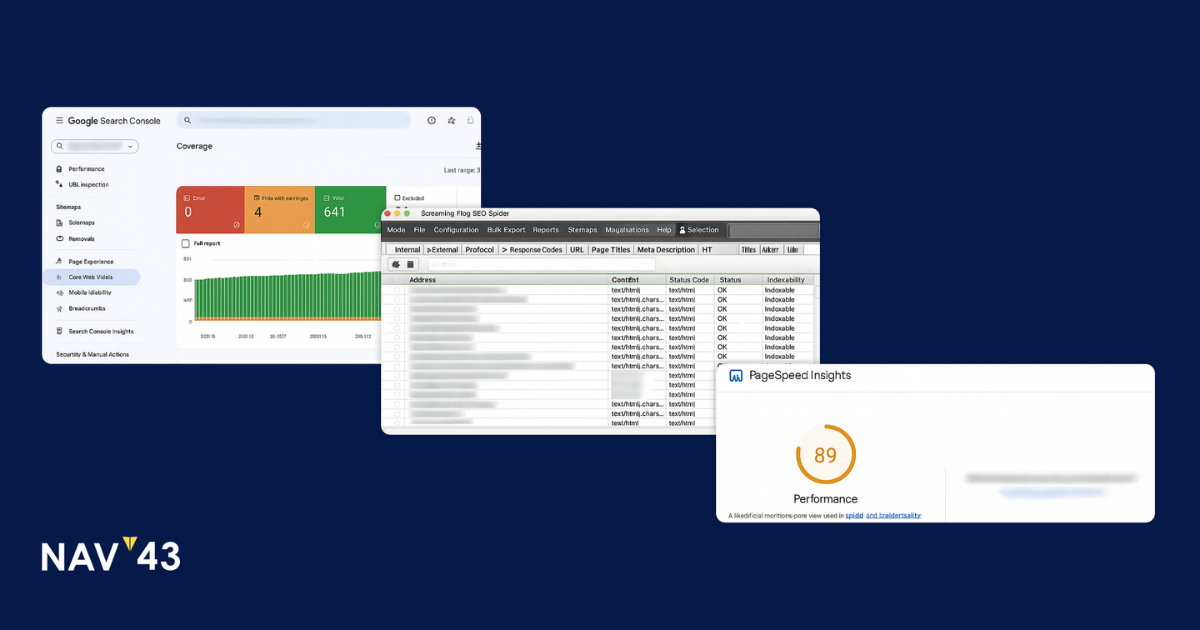

Before diving into your audit, you need the right technical SEO tools and baseline metrics. Choosing the right SEO audit tools and configuring your site audit settings properly lets you identify, prioritize, and address technical issues efficiently. Here’s your essential toolkit:

- Google Search Console: Free, direct from Google, for indexing, crawl errors, and performance.

- Screaming Frog SEO Spider: Free for up to 500 URLs, paid beyond that, for in-depth crawling and technical analysis.

- Ahrefs/Semrush/Moz: Paid, for backlink analysis, keyword tracking, and site health.

A site audit tool can help you automatically identify, diagnose, and fix technical SEO issues, improving your website’s health and search ranking.

Set up Google Search Console for your site and verify ownership.

Integrate Google Analytics to track website performance, monitor user behavior, and gather data for comprehensive SEO reporting during your audit.

Together, these audits and tools support your broader digital marketing strategy.

Free Tools:

- Google Search Console – Your window into how Google sees your site

- Screaming Frog SEO Spider – Free for up to 500 URLs

- Google PageSpeed Insights – Core Web Vitals and performance data

- Chrome DevTools – Built-in browser diagnostics

Some of these tools can be set up to perform automatic site audits on a weekly or monthly basis, helping you catch technical SEO issues early and maintain your website’s health.

Paid Tools:

- Screaming Frog (paid version) – Unlimited crawling with advanced features

- Sitebulb – Visual site architecture mapping

- Ahrefs or SEMrush – Comprehensive SEO suites with technical modules

- DeepCrawl or Botify – Enterprise-level crawling for massive sites

SEO agencies often rely on these advanced tools to conduct thorough technical SEO audits for their clients.

For Google Search Console setup, ensure you’ve verified all property variations (www/non-www, HTTP/HTTPS). Add your XML sitemap and check that tracking is properly configured. This free tool provides invaluable data directly from Google’s index.

The Screaming Frog tutorial on their site offers excellent configuration guidance. Start with these key settings:

- Set User-Agent to Googlebot

- Respect robots.txt directives

- Configure crawl speed to avoid overloading your server

- Import your XML sitemap for comparison

Before starting your audit, benchmark these metrics:

- Current organic traffic levels

- Number of indexed pages in Google

- Average page load time

- Core Web Vitals scores

- Current ranking positions for target keywords

Document these baselines – you’ll measure improvement against them later.

Step 1 – Run a Full Site Crawl

A comprehensive site crawl is a core part of any technical SEO site audit and forms the foundation of your technical audit. This process simulates how search engines discover and analyze your pages.

Start by configuring your SEO spider properly. In Screaming Frog:

- Enter your homepage URL in the URL field at the top of the window

- Navigate to Configuration > Spider > Limits and set appropriate constraints

- Enable JavaScript rendering if your site uses it heavily

- Configure the crawl to store images, CSS, and JavaScript files

For large sites, implement crawl budget optimization strategies:

- Start with critical sections first (main navigation pages, top products/services)

- Use URL pattern filtering to exclude low-value pages temporarily

- Schedule crawls during off-peak hours to minimize server impact

- Consider segmenting the crawl by subdomain or directory

As the crawl runs, the spider discovers every accessible web page, following all the internal links just like Googlebot would. It’s important to track how many pages are crawled and ensure that all the internal links are being followed to maximize site coverage. The tool collects vital data including:

- Response codes (200, 301, 404, 500, etc.)

- Page titles and meta descriptions

- Heading structure (H1s, H2s, etc.)

- Internal and external links

- Images and their attributes

- Canonical tags and directives

- Page size and load time

When reviewing the data, analyze your web pages for technical issues that could impact SEO performance.

Once complete, export the crawl data to CSV or Excel. Create separate tabs for different issue types – this organization helps when prioritizing fixes later. When exporting, review the list of pages crawled to identify how many pages were discovered and to spot any missing or orphaned web pages. Pay special attention to:

- Crawl depth – Pages requiring many clicks from the homepage

- Orphan pages – Pages with no internal links pointing to them

- Redirect chains – Multiple consecutive redirects wasting crawl budget

- Blocked resources – CSS/JS files accidentally blocked by robots.txt
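To make crawl depth and orphan pages concrete, here is a minimal Python sketch that works on an exported link graph. The `links` dictionary and `known_urls` set are made-up sample data, and the function names are my own, not part of any crawler's export format. It assumes you have already turned your crawl export into a "page links to these pages" mapping.

```python
from collections import deque

def crawl_depths(links, start):
    """Breadth-first search from the start URL; returns {url: clicks from start}."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

def find_orphans(links, known_urls, start):
    """URLs known to exist (e.g. from the sitemap) that no crawled page links to."""
    linked = {t for targets in links.values() for t in targets}
    return sorted(u for u in known_urls if u not in linked and u != start)

# Hypothetical link graph: page -> pages it links to
links = {
    "/": ["/products", "/blog"],
    "/products": ["/products/widget"],
    "/blog": ["/blog/post-1"],
    "/blog/post-1": ["/products/widget"],
}
# Hypothetical list of URLs known from the sitemap or analytics
known_urls = {"/", "/products", "/blog", "/blog/post-1",
              "/products/widget", "/old-case-study"}

depths = crawl_depths(links, "/")
deep_pages = sorted(u for u, d in depths.items() if d >= 2)
orphans = find_orphans(links, known_urls, "/")
```

Breadth-first search gives the shortest click path to each page, which is the usual meaning of crawl depth. The depth cutoff of 2 is arbitrary here, so pick whatever suits your site's size.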

For sites exceeding Screaming Frog’s free 500-URL limit, prioritize crawling your most important sections first, or invest in the paid version for unlimited crawling.

Step 2 – Inspect Index Coverage in GSC

While your crawler shows what’s technically accessible, Google Search Console reveals what’s actually in Google’s index. The index coverage report is your diagnostic goldmine.

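Navigate to the “Pages” report (formerly “Coverage”) in GSC. The current report splits URLs into two groups, each with a list of reasons:

- Indexed – Pages successfully in Google’s index

- Not indexed – Pages Google left out of the index, with the reason for each (for example, a noindex tag, a redirect, or a 404)

The older Coverage report used four statuses (Error, Valid with warnings, Valid, and Excluded), so you may still see those labels in older documentation and exports.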

Common Google Search Console errors include:

- Submitted URL marked ‘noindex’ – Your sitemap includes pages you’ve told Google not to index

- Submitted URL blocked by robots.txt – Sitemap/robots.txt conflict

- Redirect error – Broken redirects or redirect loops

- Server error (5xx) – Your server failed when Google tried to crawl

- Submitted URL not found (404) – Dead pages still in your sitemap

- Duplicate pages – Multiple URLs with identical or very similar content can confuse search engines, making it unclear which version to index and potentially leading to indexing problems.

Understanding noindex vs. robots.txt is crucial. Many site owners confuse these directives:

- Robots.txt blocks crawling entirely – Google can’t even see the page content, so it also can’t see a noindex tag on that page

- Noindex allows crawling but prevents indexing – Google sees the page but won’t show it in results
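The difference between the two directives is easier to see in code. This rough sketch uses only Python's standard library. The function names, the sample robots.txt, and the sample HTML are all illustrative assumptions. It checks only the meta robots tag, not the `X-Robots-Tag` HTTP header, and as far as I know the standard-library robots.txt parser uses simple prefix matching, so it can disagree with Googlebot on wildcard rules.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser

class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""
    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            self.directives += [d.strip().lower() for d in content.split(",")]

def is_crawl_blocked(robots_txt, url, agent="Googlebot"):
    """True if the robots.txt text disallows this URL for the given agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(agent, url)

def has_noindex(html):
    """True if the page carries a meta robots noindex directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives

def diagnose(robots_txt, url, html):
    blocked = is_crawl_blocked(robots_txt, url)
    noindex = has_noindex(html)
    if blocked and noindex:
        return "conflict: noindex is present but the crawl block hides it"
    if blocked:
        return "blocked from crawling"
    if noindex:
        return "crawlable, excluded from the index"
    return "crawlable and indexable"

# Hypothetical inputs
robots_txt = "User-agent: *\nDisallow: /private/\n"
noindex_page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
plain_page = "<html><head><title>Blog</title></head></html>"

result_conflict = diagnose(robots_txt, "https://example.com/private/page", noindex_page)
result_noindex = diagnose(robots_txt, "https://example.com/thanks", noindex_page)
result_ok = diagnose(robots_txt, "https://example.com/blog/", plain_page)
```

The "conflict" case is the one that catches people out: if you want a page removed from the index, it has to stay crawlable so the noindex can be read.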

Use the URL Inspection tool to diagnose specific pages. This shows exactly how Google sees any URL, including:

- Last crawl date

- Indexing status

- Any blocking directives

- Canonical URL Google selected

- Detected enhancements such as structured data

For pages incorrectly excluded, fix the underlying issue and use the “Validate Fix” button. This prompts Google to recrawl affected pages and can accelerate reindexing. Resolving index coverage issues, such as duplicate pages, can improve your site’s appearance and performance in search results.

Step 3 – Validate Site Architecture & XML Sitemaps

Your site architecture SEO determines how effectively search engines and users navigate your content. A logical structure distributes link equity efficiently and ensures important pages get discovered.

Start your XML sitemap audit by checking these elements:

- Completeness – Does your sitemap include all important pages?

- Accuracy – Are listed URLs actually live and indexable?

- Size limits – Each sitemap file must stay under 50MB (uncompressed) and contain no more than 50,000 URLs

- Update frequency – Does the lastmod date reflect actual changes?

- Priority values – Google ignores the priority and changefreq values, so don’t rely on them to steer crawling

Compare your crawled URLs against your sitemap to identify orphaned pages – these are pages on your website with no incoming internal links. Orphaned pages are problematic for SEO because they are isolated from your site’s internal linking structure, making them harder for search engines to discover and reducing their ability to gain page authority. These pages rely entirely on external links or direct traffic, missing out on internal link equity.
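The crawl-versus-sitemap comparison can be sketched in a few lines of Python. This assumes a single standard `<urlset>` sitemap (not a sitemap index) and a set of crawled URLs you have exported yourself. The sample XML, the URLs, and the `audit_sitemap` name are all illustrative.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}
MAX_URLS = 50_000
MAX_BYTES = 50 * 1024 * 1024  # 50MB, measured on the uncompressed file

def sitemap_urls(xml_text):
    """Return every <loc> value from a urlset sitemap."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)]

def audit_sitemap(xml_text, crawled_urls):
    urls = sitemap_urls(xml_text)
    listed = set(urls)
    return {
        "url_count": len(urls),
        "over_url_limit": len(urls) > MAX_URLS,
        "over_size_limit": len(xml_text.encode("utf-8")) > MAX_BYTES,
        # Listed but never reached by following links: orphan candidates
        "in_sitemap_not_crawled": sorted(listed - crawled_urls),
        # Reachable but unlisted: possibly missing from the sitemap
        "crawled_not_in_sitemap": sorted(crawled_urls - listed),
    }

# Hypothetical sitemap and crawl export
sample = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/blog/case-study</loc></url>
</urlset>"""
crawled = {"https://example.com/", "https://example.com/pricing"}

report = audit_sitemap(sample, crawled)
```

Treat the "in sitemap, not crawled" list as candidates rather than confirmed orphans, since a crawl limit or a blocked section can produce the same result.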

I recently audited a SaaS site with 400+ orphaned pages – mostly valuable blog posts and case studies. By incorporating these into the site architecture through strategic internal linking, organic traffic to those pages increased by 73% within two months.

Check for problematic URL patterns:

- Parameter-based duplicates (?sort=price, ?color=red)

- Print versions creating duplicate content

- Session IDs appended to URLs

- Multiple paths to the same content
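One way to surface parameter-based duplicates is to strip the parameters you consider non-essential and group URLs by what is left. The sketch below is only that: the `IGNORED_PARAMS` list is an assumption you would tailor to your own site, and the URLs are invented.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed list of parameters that do not change the main content
IGNORED_PARAMS = {"sort", "color", "sessionid", "print"}

def normalize(url):
    """Drop ignored parameters and sort the rest so equivalent URLs compare equal."""
    parts = urlsplit(url)
    kept = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
            if k.lower() not in IGNORED_PARAMS]
    query = urlencode(sorted(kept))
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, query, ""))

def duplicate_groups(urls):
    """Group URLs that collapse to the same normalized form."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    return {base: variants for base, variants in groups.items() if len(variants) > 1}

# Hypothetical crawl export
urls = [
    "https://example.com/shoes?sort=price",
    "https://example.com/shoes?color=red",
    "https://example.com/shoes",
    "https://example.com/hats?page=2",
]
groups = duplicate_groups(urls)
```

Each group with more than one member is a place to check that a canonical tag points every variant at the clean URL.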

For international sites, validate hreflang implementation in your sitemap. Common mistakes include:

- Missing return tags (each language must reference all others)

- Incorrect language/country codes

- Pointing to redirected or non-existent pages

- Conflicting hreflang and canonical tags
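Missing return tags are the easiest of these mistakes to check programmatically. This small sketch assumes you have already extracted each page's hreflang annotations into a dictionary. The `hreflang_map` structure and the URLs are invented for illustration.

```python
def missing_return_tags(hreflang_map):
    """hreflang_map: {page_url: {lang_code: alternate_url}}.

    Returns (source, target) pairs where source references target
    but target does not reference source in return.
    """
    problems = []
    for source, alternates in hreflang_map.items():
        for target in alternates.values():
            if target == source:
                continue  # self-reference, nothing to reciprocate
            back_links = hreflang_map.get(target, {})
            if source not in back_links.values():
                problems.append((source, target))
    return problems

# Hypothetical annotations: the French page forgets to link back
hreflang_map = {
    "https://example.com/en/": {
        "en": "https://example.com/en/",
        "de": "https://example.com/de/",
        "fr": "https://example.com/fr/",
    },
    "https://example.com/de/": {
        "de": "https://example.com/de/",
        "en": "https://example.com/en/",
    },
    "https://example.com/fr/": {
        "fr": "https://example.com/fr/",
    },
}
problems = missing_return_tags(hreflang_map)
```

This only verifies reciprocity for links that are declared. It will not notice that the German page never mentions the French one, so pair it with a check that every page lists the full set of languages.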

Google retired Search Console’s International Targeting report in 2022, so verify hreflang tags with your crawler’s hreflang checks and spot-check alternate URLs with the URL Inspection tool.

Step 4 – Audit On-Page Elements (Titles, Canonicals, Images)

On-page elements might seem basic, but duplicate title tags and missing meta descriptions still plague many sites. These on-page problems are common technical SEO issues that can impact crawlability and rankings. Your crawl data reveals these issues at scale.

For title tags, identify:

- Duplicates – Multiple pages with identical titles confuse search engines

- Missing titles – Pages without any title tag

- Length issues – Titles longer than roughly 60 characters are often truncated in search results

- Non-descriptive titles – Generic titles like “Home” or “Page 2”
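If you would rather script these title checks than filter a spreadsheet, something like the following works on a simple URL-to-title mapping. The 60-character cutoff comes from the list above, while the `GENERIC_TITLES` set and the sample pages are my own placeholders.

```python
from collections import Counter

MAX_TITLE_CHARS = 60
GENERIC_TITLES = {"home", "untitled", "page 2"}  # assumed examples; extend as needed

def audit_titles(pages):
    """pages: {url: title or None}. Returns URLs grouped by issue type."""
    cleaned = {url: (title or "").strip() for url, title in pages.items()}
    counts = Counter(t.lower() for t in cleaned.values() if t)
    return {
        "missing": sorted(u for u, t in cleaned.items() if not t),
        "duplicate": sorted(u for u, t in cleaned.items()
                            if t and counts[t.lower()] > 1),
        "too_long": sorted(u for u, t in cleaned.items()
                           if len(t) > MAX_TITLE_CHARS),
        "generic": sorted(u for u, t in cleaned.items()
                          if t.lower() in GENERIC_TITLES),
    }

# Hypothetical crawl export
pages = {
    "/": "Home",
    "/a": "Blue Widgets | Example Store",
    "/b": "Blue Widgets | Example Store",
    "/c": None,
    "/d": "The Complete Guide to Choosing Blue Widgets for Every Budget and Use Case",
}
title_issues = audit_titles(pages)
```

Character count is only a proxy for truncation, so treat the "too_long" list as a starting point for review rather than a hard rule.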

Apply meta description best practices:

- Keep under 155 characters to avoid truncation

- Include target keywords naturally

- Write compelling copy that encourages clicks

- Make each description unique and page-specific

- Include a clear call-to-action when appropriate

Canonical tag issues deserve special attention. Common problems include:

- Canonicals pointing to redirected URLs

- Canonical chains (A → B → C)

- Cross-domain canonicals without proper implementation

- Missing canonicals on duplicate content
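Canonical chains are simple to detect once you have each page's canonical target in a lookup table. The sketch below assumes a `canonicals` dictionary built from your crawl export. The data is invented, and the `max_hops` guard is an arbitrary safety limit.

```python
def canonical_chain(canonicals, url, max_hops=10):
    """Follow canonical targets starting at url; returns the URLs visited in order."""
    chain = [url]
    while len(chain) <= max_hops:
        target = canonicals.get(chain[-1])
        if target is None or target == chain[-1]:
            break  # no canonical, or self-referencing: the chain ends here
        chain.append(target)
        if chain.count(target) > 1:
            break  # loop detected, stop following
    return chain

def chained_pages(canonicals):
    """Pages whose canonical target itself canonicalizes somewhere else."""
    result = {}
    for url in canonicals:
        chain = canonical_chain(canonicals, url)
        if len(chain) > 2:
            result[url] = chain
    return result

# Hypothetical canonical map: page -> canonical URL declared on that page
canonicals = {
    "/a": "/b",
    "/b": "/c",
    "/c": "/c",
    "/d": "/d",
}
chains = chained_pages(canonicals)
```

For every page that shows up here, the fix is to point its canonical straight at the last URL in the chain.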

For images, proper image alt text SEO serves both accessibility and search optimization:

- Describe the image accurately and concisely

- Include relevant keywords where natural

- Don’t stuff keywords or repeat the same alt text

- Leave decorative images with empty alt attributes (alt="")

Image optimization extends beyond alt text:

- Compress images to reduce file size (aim for under 100KB)

- Use next-gen formats (WebP, AVIF) with fallbacks

- Implement lazy loading for below-fold images

- Specify width and height attributes to prevent layout shift
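A couple of these image checks can be run directly against page HTML. This is a simplified sketch using Python's built-in HTML parser. It flags images with no alt attribute at all (an empty `alt=""` is treated as intentional) and images missing explicit dimensions. The class name and sample markup are illustrative.

```python
from html.parser import HTMLParser

class ImageAudit(HTMLParser):
    """Records <img> tags that lack an alt attribute or explicit dimensions."""
    def __init__(self):
        super().__init__()
        self.issues = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attrs = dict(attrs)
        src = attrs.get("src") or "(no src)"
        if "alt" not in attrs:
            self.issues.append((src, "missing alt attribute"))
        if "width" not in attrs or "height" not in attrs:
            self.issues.append((src, "missing width/height"))

# Hypothetical page fragment
html = """
<img src="/hero.jpg" alt="Blue widget on a desk" width="1200" height="600">
<img src="/divider.png" alt="">
<img src="/team.jpg" width="800" height="400">
"""
audit = ImageAudit()
audit.feed(html)
image_issues = audit.issues
```

Images sized purely through CSS will be flagged too, so review the width/height results before filing them as bugs.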

Step 5 – Evaluate Core Web Vitals & Page Speed

Google’s Core Web Vitals are three specific metrics that significantly impact rankings and user experience. Understanding what each one measures is crucial for modern SEO.

Largest Contentful Paint (LCP) measures loading performance – specifically, how long it takes for the largest content element to render. Google’s threshold for “good” LCP is 2.5 seconds or less. Common LCP elements include:

- Hero images or videos

- Large text blocks

- Background images loaded via CSS

Interaction to Next Paint (INP), which replaced First Input Delay (FID) as a Core Web Vital in March 2024, measures interactivity. It tracks how long the page takes to visibly respond after a user interaction such as a click, tap, or key press. The threshold for good INP is 200 milliseconds or less.

Cumulative Layout Shift (CLS) quantifies visual stability. Unexpected layout shifts frustrate users and hurt conversions. Aim for a CLS score of 0.1 or less.
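To turn these thresholds into something you can run over exported field data, a small classifier helps. The "good" limits below are the ones stated above. The "poor" limits (4.0 seconds, 500 milliseconds, 0.25) are Google's published upper bounds, which this guide doesn't otherwise cover. The metric keys and function name are my own.

```python
# (good_limit, poor_limit) per metric; values above poor_limit rate as "poor"
THRESHOLDS = {
    "lcp_s": (2.5, 4.0),
    "inp_ms": (200, 500),
    "cls": (0.1, 0.25),
}

def rate(metric, value):
    """Classify a metric value as good, needs improvement, or poor."""
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"

# Hypothetical field measurements for one page template
measurements = {"lcp_s": 2.4, "inp_ms": 350, "cls": 0.3}
ratings = {metric: rate(metric, value) for metric, value in measurements.items()}
```

Google assesses these at the 75th percentile of real page loads, so feed in p75 values rather than averages when you have them.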

To improve page speed, implement these proven optimizations:

- Enable text compression – Gzip or Brotli can reduce file sizes by 70%+

- Leverage browser caching – Set appropriate cache headers for static resources

- Minimize render-blocking resources – Defer non-critical CSS/JavaScript

- Optimize server response time – Target under 200ms TTFB

- Use a CDN – Distribute content closer to users geographically

- Implement resource hints – Preconnect, prefetch, and preload critical resources

It’s important to regularly test site speed using tools like PageSpeed Insights to monitor and improve your Core Web Vitals metrics.

Lab data from tools provides controlled testing, but field data from real users matters more.

Check both in PageSpeed Insights:

- Field Data – Actual user experience from Chrome User Experience Report

- Lab Data – Simulated performance in controlled conditions

If field data shows poor performance but lab data looks good, investigate:

- Geographic performance variations

- Device/connection speed differences

- Third-party scripts loading inconsistently

- CDN or server performance issues

Step 6 – Security, HTTPS & Mobile Usability

Security and mobile-friendliness aren’t optional – they’re fundamental ranking factors. Start your HTTPS SEO check by verifying:

- All pages use HTTPS (not HTTP)

- SSL certificate is valid and not expired

- No mixed content warnings (HTTPS pages loading HTTP resources)

- Proper redirects from HTTP to HTTPS versions

- HSTS (HTTP Strict Transport Security) is implemented

Mixed content particularly damages user trust. Browsers show security warnings when HTTPS pages load insecure resources. Use your crawler or Chrome DevTools to identify:

- Images served over HTTP

- Scripts or stylesheets using insecure protocols

- Embedded content (videos, iframes) from HTTP sources
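For a quick mixed content check on a single page, you can scan the HTML for resources requested over plain HTTP. This sketch covers only a handful of tags and ignores resources loaded from CSS or JavaScript, so treat it as a first pass. The tag list and sample markup are assumptions.

```python
from html.parser import HTMLParser

# Tags whose src attribute loads a resource into the page (assumed subset)
SRC_TAGS = {"img", "script", "iframe", "video", "audio", "source"}

class MixedContentFinder(HTMLParser):
    """Collects resources that an HTTPS page would load over plain HTTP."""
    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "link":
            # Only stylesheets are loaded as resources; canonical links are not
            rel = (attrs.get("rel") or "").lower().split()
            url = attrs.get("href") if "stylesheet" in rel else None
        elif tag in SRC_TAGS:
            url = attrs.get("src")
        else:
            url = None
        if url and url.lower().startswith("http://"):
            self.insecure.append((tag, url))

# Hypothetical page fragment
html = """
<link rel="stylesheet" href="http://cdn.example.com/site.css">
<link rel="canonical" href="http://example.com/page">
<img src="https://example.com/logo.png">
<script src="http://example.com/app.js"></script>
<iframe src="//player.example.com/video"></iframe>
"""
finder = MixedContentFinder()
finder.feed(html)
insecure_resources = finder.insecure
```

Protocol-relative URLs (starting with `//`) inherit the page's scheme, which is why the iframe above is not flagged.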

For your site security audit, check Google Search Console’s Security Issues report. Google will flag:

- Malware infections

- Deceptive pages

- Hacked content

- Social engineering attempts

Google retired the Mobile-Friendly Test and Search Console’s Mobile Usability report in December 2023, so check mobile usability with Lighthouse in Chrome DevTools instead. Common issues include:

- Viewport not configured – Add meta viewport tag for responsive design

- Text too small to read – Use minimum 16px font size

- Clickable elements too close – Maintain 48px minimum tap targets

- Content wider than screen – Avoid horizontal scrolling

It’s essential to optimize your site for mobile devices to ensure a positive user experience and improve your search rankings.

Remember that Google uses mobile-first indexing for virtually all sites, not just new ones. Your mobile version is what Google primarily crawls and indexes, making mobile optimization critical for rankings.

Step 7 – Prioritize Fixes, Report & Implement

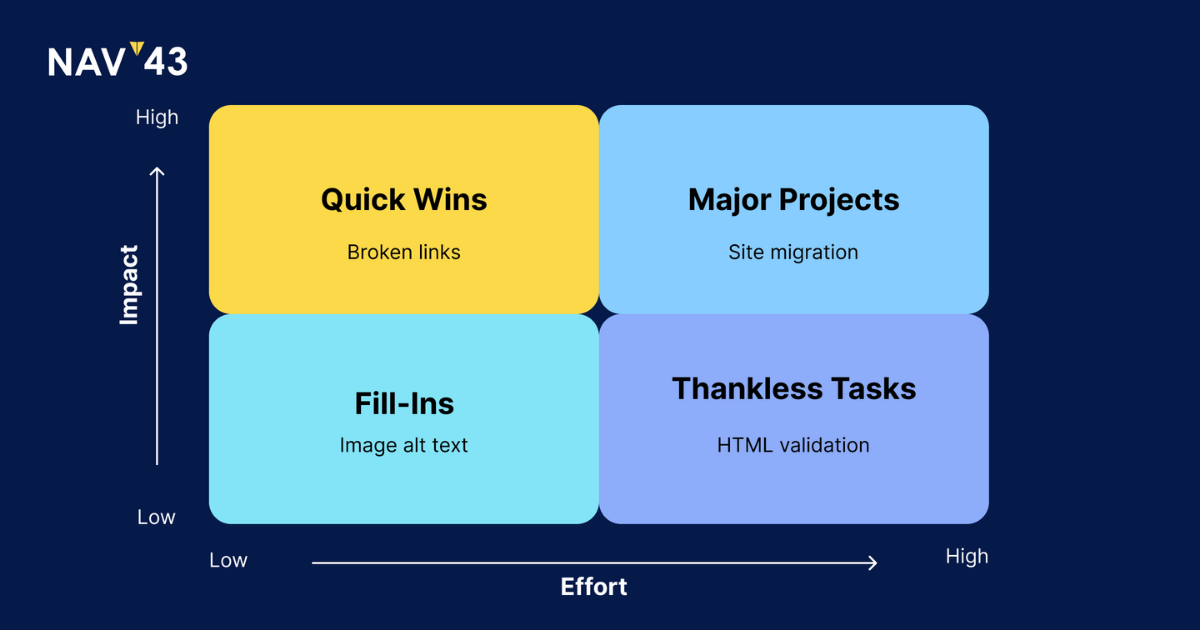

With your audit complete, you need a systematic approach to fixing issues. Create an SEO prioritization matrix using two factors:

- Impact – How much will fixing this improve rankings/traffic?

- Effort – How much time/resources does the fix require?

Plot issues on a 2×2 grid:

- High Impact, Low Effort – Quick wins to tackle immediately

- High Impact, High Effort – Major projects requiring planning

- Low Impact, Low Effort – Handle during routine maintenance

- Low Impact, High Effort – Deprioritize or ignore
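If your issue list lives in a spreadsheet, the 2×2 grid is easy to apply in code. This sketch assumes you score impact and effort on a 1-5 scale, which is my own convention rather than anything standard. The sample issues and the threshold are invented.

```python
QUADRANT_ORDER = ["quick win", "major project", "routine maintenance", "deprioritize"]

def quadrant(impact, effort, threshold=3):
    """Map 1-5 impact and effort scores (an assumed scale) onto the 2x2 grid."""
    high_impact = impact >= threshold
    high_effort = effort >= threshold
    if high_impact and not high_effort:
        return "quick win"
    if high_impact and high_effort:
        return "major project"
    if not high_impact and not high_effort:
        return "routine maintenance"
    return "deprioritize"

def prioritize(issues):
    """issues: list of (name, impact, effort). Returns (name, quadrant) in work order."""
    labelled = [(name, quadrant(impact, effort)) for name, impact, effort in issues]
    return sorted(labelled, key=lambda item: QUADRANT_ORDER.index(item[1]))

# Hypothetical audit findings
issues = [
    ("Rewrite legacy URL structure", 2, 5),
    ("Migrate to a new CDN", 4, 5),
    ("Missing alt text on old blog images", 2, 1),
    ("Redirect loops on product pages", 5, 2),
]
plan = prioritize(issues)
```

The scores themselves are still a judgment call. The value of scripting it is that everyone on the team sorts the backlog the same way.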

Your technical SEO report should include:

- Executive Summary – Key findings and business impact

- Priority Issues – Top 10-15 fixes with clear explanations

- Detailed Findings – Complete issue list organized by type

- Implementation Guide – Specific steps to fix each issue

- Success Metrics – How you’ll measure improvement

Common quick wins to fix 404 errors and other issues:

- Implement 301 redirects for broken URLs with inbound links

- Update internal links pointing to redirected pages

- Remove or update outdated sitemap entries

- Fix broken image links

- Resolve redirect chains and loops
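Resolving redirect chains usually means rewriting each redirect so it points directly at the final destination. Here is one possible way to compute that from a redirect map. The `redirects` dictionary is sample data and `max_hops` is an arbitrary guard.

```python
def final_destination(redirects, url, max_hops=10):
    """Follow the redirect map to its end; returns None on a loop or too many hops."""
    seen = {url}
    current = url
    for _ in range(max_hops):
        if current not in redirects:
            return current
        current = redirects[current]
        if current in seen:
            return None  # redirect loop
        seen.add(current)
    return None

def flatten(redirects):
    """Return (flat_map, loops): each source mapped straight to its final URL."""
    flat, loops = {}, []
    for source in redirects:
        destination = final_destination(redirects, source)
        if destination is None:
            loops.append(source)
        else:
            flat[source] = destination
    return flat, sorted(loops)

# Hypothetical redirect rules: source -> target
redirects = {
    "/old": "/interim",
    "/interim": "/new",
    "/x": "/y",
    "/y": "/x",
}
flat_map, loops = flatten(redirects)
```

The flattened map tells you what each rule should say, and the same map can drive the internal link updates so links skip redirects entirely.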

Assign clear ownership for each fix:

- Development team – Server configs, page speed, technical implementations

- Content team – Title tags, meta descriptions, alt text

- SEO team – Canonicals, schema markup, strategic decisions

After implementing fixes, use Google Search Console’s validation tools to confirm resolution. Monitor rankings and traffic to measure impact.

International SEO Considerations: Ensuring Global Visibility

If your website targets audiences in multiple countries or languages, international SEO should be a core part of your technical SEO audit. International SEO focuses on optimizing your site so that search engines can serve the right content to users in different regions, boosting your search engine rankings and visibility worldwide.

A thorough technical SEO audit for international sites goes beyond standard checks. It involves verifying that hreflang tags are correctly implemented to signal language and regional targeting to search engines, ensuring your XML sitemap includes all localized versions of your pages, and reviewing meta tags for accurate language and country codes. Internal linking should also be optimized to help both users and search engine bots navigate between different language or regional sections of your site.

By addressing these international SEO elements during your technical SEO audit, you can prevent common pitfalls like duplicate content across regions, misdirected traffic, or poor indexation of localized pages. Regular technical SEO audits help you adapt your SEO strategy for global markets, maximize your site’s search engine visibility, and capture more organic traffic from around the world.

Continuous Monitoring & Audit Cadence

Technical SEO requires ongoing vigilance. Establish an SEO monitoring system to catch issues before they impact rankings.

Set up automated SEO alerts for:

- Sudden drops in indexed pages

- Spike in crawl errors

- Core Web Vitals degradation

- Security warnings

- Mobile usability issues
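An alert for sudden drops in indexed pages can be as simple as comparing consecutive snapshots. The sketch below assumes you record the indexed page count yourself on a regular schedule. The 10% threshold, the dates, and the counts are all placeholders.

```python
def indexed_page_alerts(history, max_drop=0.10):
    """history: chronological list of (date, indexed_count).

    Flags any snapshot whose count fell by more than max_drop
    (a fraction) relative to the previous snapshot.
    """
    alerts = []
    for (_, previous), (date, count) in zip(history, history[1:]):
        if previous > 0 and (previous - count) / previous > max_drop:
            alerts.append((date, previous, count))
    return alerts

# Hypothetical weekly snapshots
history = [
    ("2024-05-06", 12000),
    ("2024-05-13", 11900),
    ("2024-05-20", 9800),
]
alerts = indexed_page_alerts(history)
```

The same pattern works for crawl error counts or Core Web Vitals pass rates if you flip the comparison to look for spikes instead of drops.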

For your recurring site audit schedule:

- Weekly – Check Search Console for new errors

- Monthly – Mini-audit of high-priority pages

- Quarterly – Full technical audit of entire site

- Post-deployment – Immediate checks after any major changes

Large e-commerce sites or news publishers might need more frequent audits due to constant content changes. Smaller, static sites can extend to semi-annual full audits.

Track these metrics over time:

- Number of indexed pages

- Average crawl budget utilization

- Core Web Vitals pass rate

- 4xx and 5xx error trends

- Page speed improvements

Regularly monitor site performance, including load times and mobile responsiveness, to maintain strong search engine rankings.

Quick-Win Checklist & Common Pitfalls

Here’s your technical SEO checklist for immediate improvements:

SEO quick wins you can implement today:

- Submit an updated XML sitemap to Google Search Console

- Fix broken internal links identified in your crawl

- Add missing title tags and meta descriptions

- Compress images over 100KB

- Implement 301 redirects for deleted pages with backlinks

- Add structured data markup for rich results

- Enable Gzip compression on your server

- Fix mobile viewport configuration

Avoid these common mistakes when fixing crawl errors:

- Blindly blocking crawl paths – Understand what you’re blocking and why

- Over-optimizing robots.txt – Don’t block CSS/JS needed for rendering

- Ignoring redirect chains – Fix the source, don’t add more redirects

- Focusing only on homepage speed – Test templates and high-traffic pages

- Fixing symptoms, not causes – Address why issues keep recurring

Conclusion & Key Takeaways

A comprehensive technical SEO audit uncovers the hidden issues sabotaging your organic performance. By following these seven steps, you’ll identify and fix the crawl errors, performance problems, and indexing issues that prevent your content from ranking.

Remember these technical SEO best practices:

- Regular audits prevent small issues from becoming major problems

- Focus on high-impact fixes that improve both user experience and crawlability

- Use data to prioritize – not all issues deserve immediate attention

- Document your process for consistency and knowledge transfer

Your SEO audit summary should drive action. Transform your findings into a clear implementation roadmap with assigned owners and deadlines. Monitor the impact of your fixes and adjust your approach based on results.

Ready to Uncover Hidden SEO Errors?

Running a thorough technical audit takes time, expertise, and the right tools. If you’re struggling to find the bandwidth or decode the data, you’re not alone. Many businesses discover that the opportunity cost of DIY audits exceeds the investment in professional help.

Download our 120-point Technical SEO Audit Checklist and start fixing issues today. This free SEO audit template includes:

- Step-by-step audit worksheet with checkboxes

- Priority scoring matrix for ranking fixes

- Tool configuration guides for Screaming Frog and GSC

- Sample reporting templates

- Common fix implementation instructions

Stop leaving rankings on the table due to technical issues. Get your technical SEO audit checklist and take control of your site’s foundation.

Want expert eyes on your site instead? Our technical SEO team can conduct a comprehensive audit and provide a prioritized fix roadmap. We’ll handle the heavy lifting while you focus on growing your business.